Abstract

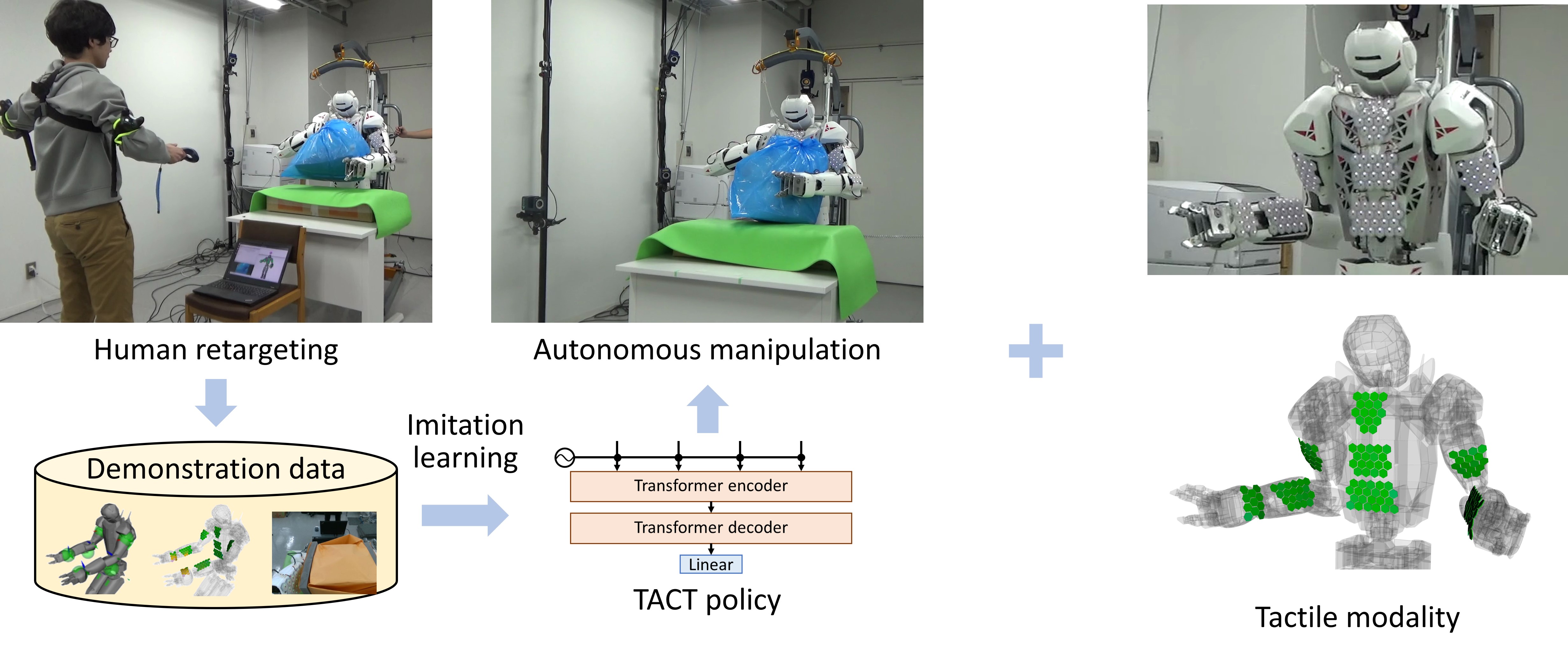

Manipulation with whole-body contact by humanoid robots offers distinct advantages, including enhanced stability and reduced load. On the other hand, it raises challenges such as the increased computational cost of motion generation and the difficulty of measuring broad-area contact. We have therefore developed a humanoid control system that allows a humanoid robot equipped with tactile sensors on its upper body to learn a policy for whole-body manipulation through imitation learning based on human teleoperation data. This policy, named tactile-modality extended ACT (TACT), takes multiple sensor modalities as input, including joint position, vision, and tactile measurements. Furthermore, by integrating this policy with retargeting and locomotion control based on a biped model, we demonstrate that the life-size humanoid robot RHP7 Kaleido is capable of achieving whole-body contact manipulation while maintaining balance and walking. Through detailed experimental verification, we show that inputting both vision and tactile modalities into the policy improves the robustness of manipulation involving broad and delicate contact.

Methodology

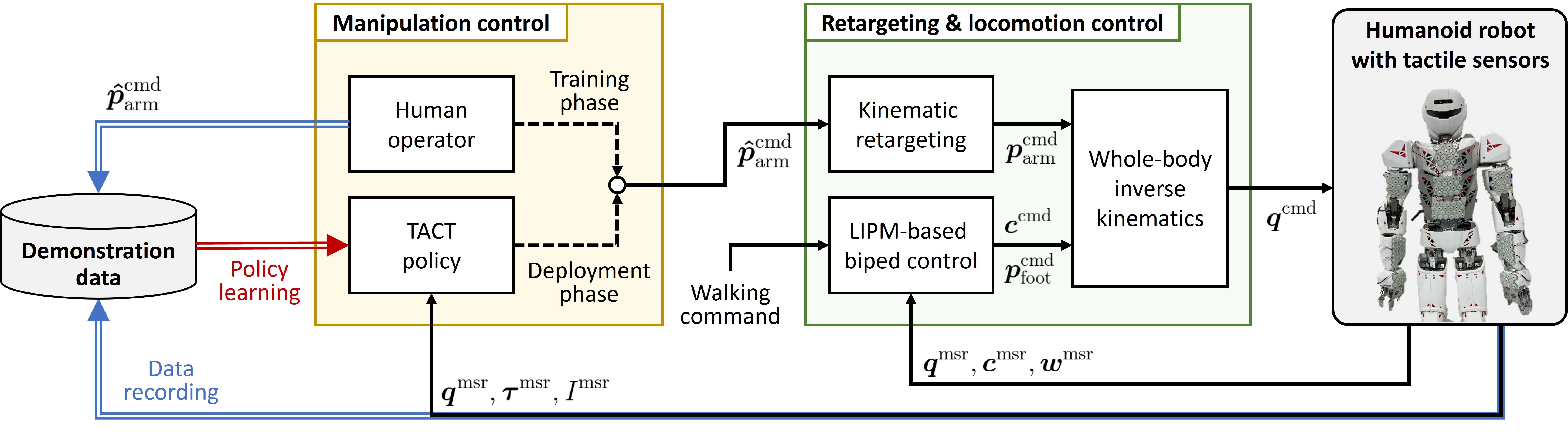

We propose a learning-based control system that enables a humanoid to achieve loco-manipulation with delicate and rich contact. The system mounts distributed tactile sensors on the robot's upper body and applies control based on their measurements. The core of the control system is a Transformer-based policy that processes multimodal inputs, including tactile, visual, and proprioceptive (joint position) data, and generates actions over a future horizon. The sensory-motor data necessary for model training is obtained through online teleoperation of the robot, wherein the posture of a human wearing pose trackers on the upper body is retargeted to the humanoid.

The architecture of our control system comprises two distinct layers: an upper layer responsible for generating manipulation motions with tactile feedback, and a lower layer that handles retargeting and locomotion control. The model-based retargeting and locomotion control enforces the physical constraints of the robot, while the learning-based manipulation control generates whole-body contact motions that require complex skills.

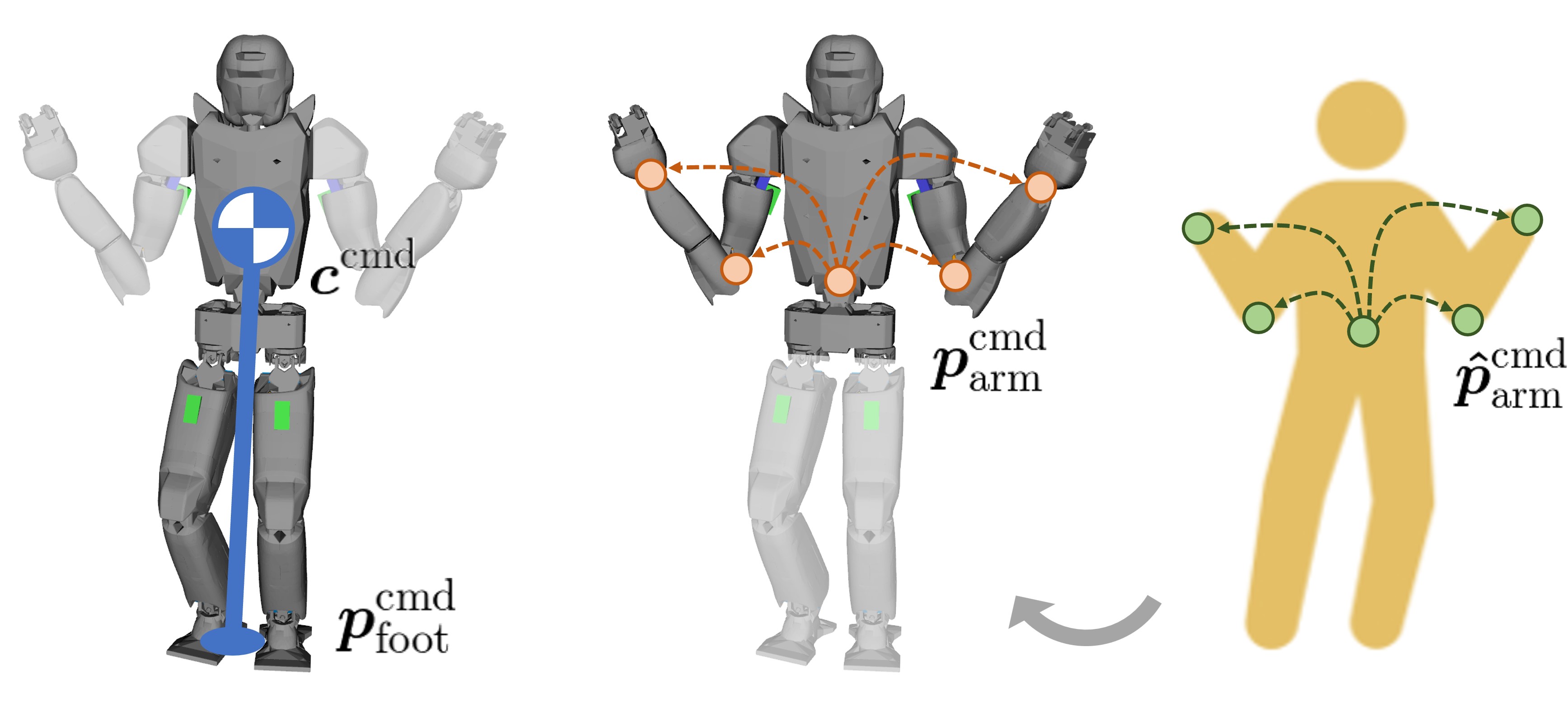

The posture of the human upper body is measured and retargeted to the humanoid in real time. The human operator wears trackers that can measure 3D poses on the waist, elbows, and wrists. The target poses for the robot's elbows and wrists relative to the robot's waist are calculated from the measured poses for the human elbows and wrists relative to the human waist.
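The relative-pose step described above can be sketched as follows. This is a minimal illustration and not the authors' implementation: it assumes poses are given as 4x4 homogeneous transforms in a common world frame, and the function names and the `scale` parameter (a hypothetical ratio between robot and human limb lengths) are introduced here only for the example.

```python
import numpy as np


def make_pose(rotation, translation):
    """Build a 4x4 homogeneous transform from a 3x3 rotation and a 3-vector."""
    pose = np.eye(4)
    pose[:3, :3] = rotation
    pose[:3, 3] = translation
    return pose


def relative_pose(world_T_waist, world_T_link):
    """Express a link pose in the waist frame: inv(world_T_waist) @ world_T_link."""
    return np.linalg.inv(world_T_waist) @ world_T_link


def retarget(human_waist, human_links, scale=1.0):
    """Map human elbow/wrist poses to target poses relative to the robot waist.

    `scale` is a hypothetical limb-length ratio (an assumption of this sketch);
    it rescales only the translation and leaves the orientation unchanged.
    """
    targets = {}
    for name, world_T_link in human_links.items():
        waist_T_link = relative_pose(human_waist, world_T_link)
        waist_T_link[:3, 3] *= scale
        targets[name] = waist_T_link
    return targets


# Example: waist rotated 90 degrees about z, wrist one metre along world y.
rot_z_90 = np.array([[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]])
waist = make_pose(rot_z_90, [0.0, 0.0, 0.0])
wrist = make_pose(np.eye(3), [0.0, 1.0, 0.0])
targets = retarget(waist, {"left_wrist": wrist}, scale=0.5)
```

Because the targets are expressed relative to the waist, they do not depend on where the operator stands in the tracking space. The resulting poses would then be passed to whatever solver converts them into joint commands, which is outside the scope of this sketch.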

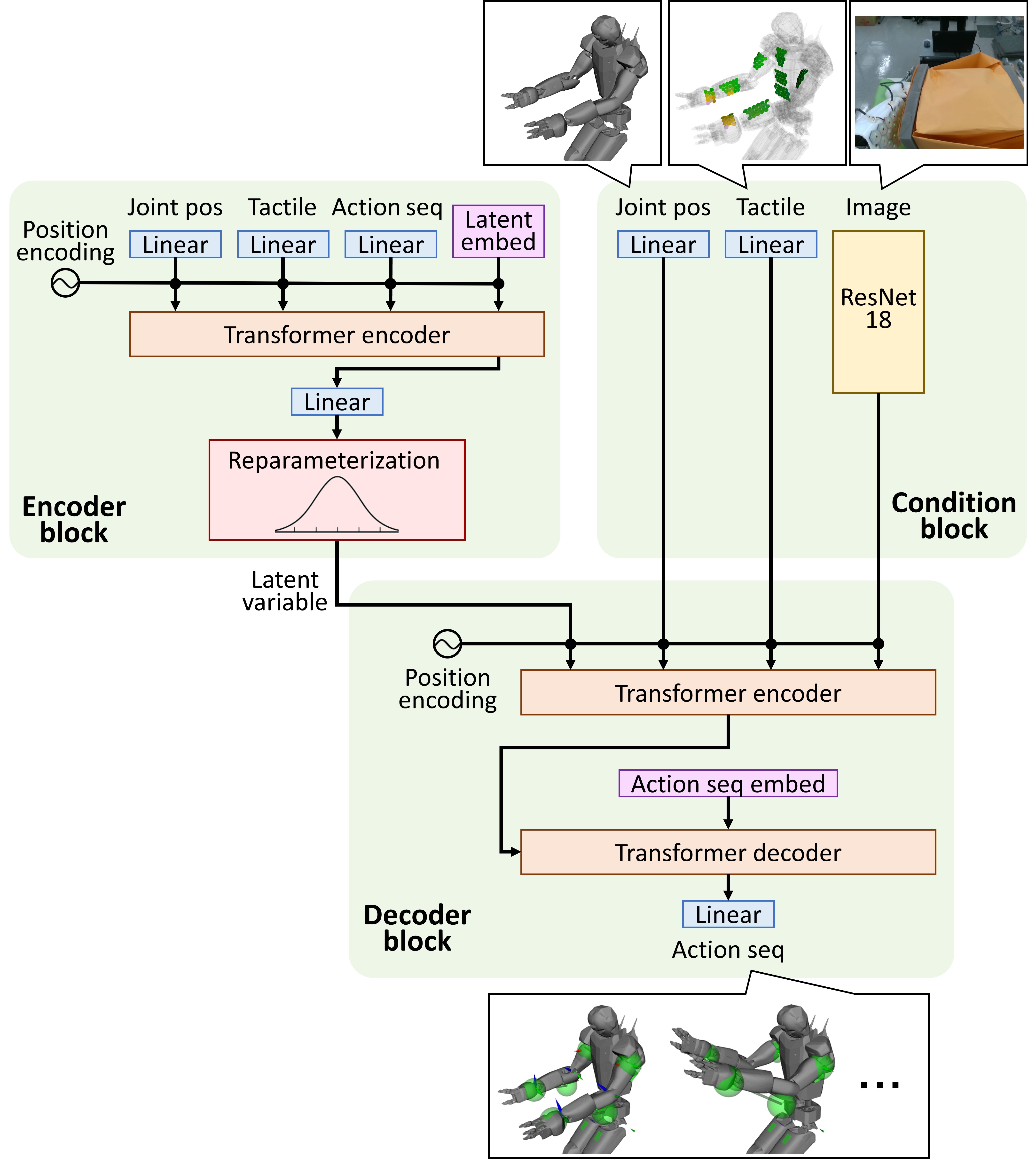

The TACT model is based on a conditional variational autoencoder (CVAE), and consists of an encoder, a decoder, and a condition block. The encoder and decoder are implemented using Transformers, while ResNet18 is used for image feature extraction. The key difference from ACT lies in the inclusion of tactile measurements: they are flattened into a 1D vector, linearly projected into a token, and fed into both the encoder and condition blocks. This allows the Transformer to learn correlations between tactile input and other modalities for whole-body contact manipulation.
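The tactile tokenization described above (flatten, then linearly project into one token) can be illustrated with a small NumPy sketch. It is not the TACT implementation: the class name, the random weight initialization, and the example dimensions (`num_cells`, `d_model`) are assumptions chosen for illustration, and a real model would learn the projection jointly with the Transformer.

```python
import numpy as np


class TactileTokenizer:
    """Flatten tactile measurements and linearly project them into one token."""

    def __init__(self, num_cells, d_model, seed=0):
        rng = np.random.default_rng(seed)
        # Projection parameters; random here, learned during training in practice.
        self.weight = rng.normal(0.0, 0.02, size=(num_cells, d_model))
        self.bias = np.zeros(d_model)

    def __call__(self, tactile):
        # Flatten readings from all sensor cells into a single 1D vector.
        flat = np.asarray(tactile, dtype=np.float64).reshape(-1)
        if flat.shape[0] != self.weight.shape[0]:
            raise ValueError("unexpected number of tactile cells")
        # Linear projection to the Transformer embedding width.
        return flat @ self.weight + self.bias


# Hypothetical sizes: 3 patches of 4 cells each, embedding width 8.
tokenizer = TactileTokenizer(num_cells=12, d_model=8)
tactile_token = tokenizer(np.ones((3, 4)))

# The tactile token is placed alongside tokens from the other modalities
# (zero placeholders here) to form one input sequence.
joint_token = np.zeros(8)
image_tokens = np.zeros((5, 8))
sequence = np.vstack([joint_token, tactile_token, image_tokens])
```

Representing all tactile cells as a single token keeps the sequence length almost unchanged relative to ACT, while still letting attention relate the tactile token to the joint-position and image tokens.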

Manipulation of a Plastic Bag

The robot lifts a large plastic bag in two different ways: one is to grasp the top of the bag with one hand and hold it with the other arm, and the other is to hold it with both arms from both sides. For each motion, we collected 8 episodes of demonstration data and trained an individual policy. The results show that the proposed method can be applied to manipulation motions in which the robot contacts the object simultaneously at many parts of the body, including the forearm and the chest.

Manipulation of a Paper Box

Since the tactile modality is particularly important for manipulations that require fine adjustment of the contact force, we chose a task in which the robot uses both arms to hold up a fragile box made of thin paper folded and glued with sponge on both sides. To prevent the object from rotating, the robot needs to make contact with the surface of the forearm and wrist, which requires richer contact than pick-and-place with a gripper. In addition, because paper boxes are fragile and easily crushed, the delicate contact must be controlled to avoid applying too much force.

We evaluated the success rate of the same task with the proposed TACT policy and three baseline policies. The first baseline replays the human teleoperation data as-is (Replay). The other two baselines each remove one modality from TACT: the vision modality (TACT w/o vision) or the tactile modality (TACT w/o tactile, which is identical to the original ACT). The experimental results confirm that handling both visual and tactile modalities, as TACT does, is essential for delicate whole-body contact manipulation.

Simulation Validation

To further evaluate the proposed method, we constructed a simulation environment using the MuJoCo physics engine. The robot was equipped with simulated tactile sensor patches composed of hexagonally arranged cells, replicating the configuration of the real robot. The robot performed a box reorientation task with complex contact transitions, tilting a box on a table by 90 degrees using both arms. The proposed TACT policy outperformed the baselines TACT w/o vision and TACT w/o tactile, which is consistent with the real-world experiments despite the differences in domain and task.

Main Video

Citation

@ARTICLE{HumanoidTactileImitationLearning:Murooka:RAL2025,
  author={Masaki Murooka and Takahiro Hoshi and Kensuke Fukumitsu and Shimpei Masuda and Marwan Hamze and Tomoya Sasaki and Mitsuharu Morisawa and Eiichi Yoshida},
  journal={IEEE Robotics and Automation Letters},
  title={TACT: Humanoid Whole-body Contact Manipulation through Deep Imitation Learning with Tactile Modality},
  year={2025},
  volume={10},
  number={8},
  pages={7819-7826},
  doi={10.1109/LRA.2025.3580329}
}